Problem

Wikidata validates statements against the constraints from the associated property.

The constraints are user-defined, and one of the possible constraint types for text values is a regex pattern (the format constraint).

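Because a malicious regex (for example, one that triggers catastrophic backtracking) could tie up server resources, the MediaWiki PHP backend for Wikidata does not evaluate these patterns with PHP's preg_match. Instead, the evaluation needs to be isolated in some way.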

The current workaround sends each check to the SPARQL query service. This incurs a lot of overhead (network latency, TCP and HTTP handling, SPARQL parsing, query engine preparation), so the format constraint is slow even for benign regexes. We should investigate whether regexes can be checked more locally. However, the mechanism must be tightly restricted to prevent denial-of-service attacks via malicious regexes.
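To make "isolated and tightly restricted" concrete, here is a minimal sketch in Python rather than PHP. It is not Shellbox and not the WikibaseQualityConstraints code: it simply runs the regex in a separate short-lived interpreter process, refuses oversized input up front, and kills the process after a hard timeout. The function name `check_format`, the limit constants, and the choice of whole-string matching are all hypothetical, and Python's `re` dialect differs from PCRE.

```python
import json
import subprocess
import sys
from typing import Optional

# Hypothetical limits for illustration only; real values would need tuning.
MAX_PATTERN_LENGTH = 1_000
MAX_TEXT_LENGTH = 10_000
TIMEOUT_SECONDS = 2.0

# Program run in the isolated child process: it reads the pattern and text as JSON
# from stdin and prints "1" if the whole text matches the pattern, "0" otherwise.
_CHILD_PROGRAM = (
    "import json, re, sys\n"
    "data = json.load(sys.stdin)\n"
    "print('1' if re.fullmatch(data['pattern'], data['text']) else '0')\n"
)


def check_format(pattern: str, text: str) -> Optional[bool]:
    """Return True/False for match/no match, or None if the check could not be completed."""
    # Refuse oversized input before spending any resources on it.
    if len(pattern) > MAX_PATTERN_LENGTH or len(text) > MAX_TEXT_LENGTH:
        return None
    payload = json.dumps({"pattern": pattern, "text": text})
    try:
        # A separate interpreter process (-I: isolated mode) keeps a runaway regex
        # away from the caller; subprocess.run kills the child if the timeout expires.
        result = subprocess.run(
            [sys.executable, "-I", "-c", _CHILD_PROGRAM],
            input=payload,
            capture_output=True,
            text=True,
            timeout=TIMEOUT_SECONDS,
        )
    except subprocess.TimeoutExpired:
        return None
    if result.returncode != 0:
        # e.g. the pattern failed to compile in the child
        return None
    return result.stdout.strip() == "1"
```

For example, `check_format(r"Q[1-9]\d*", "Q42")` returns `True`, while a backtracking-prone pattern such as `(a+)+` against `"a" * 40 + "b"` hits the timeout and returns `None`. One option would be to report such a `None` as "check could not be performed" rather than as a constraint violation.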

Solution

Use Shellbox: https://www.mediawiki.org/wiki/Shellbox (see also https://www.mediawiki.org/wiki/Manual:Shell_framework)

! In T240884#7092435, @tstarling wrote:

Yes, the RPC endpoint was added to support this use case. Use MediaWikiServices::getInstance()->getShellboxClientFactory()->getClient()->call().

https://github.com/wikimedia/shellbox/blob/master/src/Client.php#L49-L69

Acceptance Criteria:🏕️🌟

- Have regex checking functionality provided by shellbox

- Have a configuration flag to choose between SPARQL and Shellbox regex evaluation

- Have a plan for deploying Shellbox regex checks to wikidata.org, covering deployment to Beta, Test and production Wikidata, and whether to switch over all at once (big bang) or roll out gradually (e.g. by a ratio/percentage of checks, or by some other method)
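The flag and a percentage-based rollout could be combined roughly as follows. This is a hypothetical Python sketch, not existing Wikibase configuration: `RegexBackendConfig`, `use_shellbox`, `shellbox_percentage` and the choice of hashing key (e.g. a statement ID or the pattern itself) are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class RegexBackendConfig:
    # Hypothetical settings, not existing Wikibase configuration variables.
    use_shellbox: bool = False    # master flag: False means always use SPARQL
    shellbox_percentage: int = 0  # share of checks (0-100) sent to Shellbox when enabled


def rollout_bucket(key: str) -> int:
    """Map a key to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_backend(config: RegexBackendConfig, key: str) -> str:
    """Return "shellbox" or "sparql" for the check identified by key."""
    if not config.use_shellbox:
        return "sparql"
    # Keys whose bucket falls below the percentage are routed to Shellbox.
    return "shellbox" if rollout_bucket(key) < config.shellbox_percentage else "sparql"
```

In this sketch a big bang deployment corresponds to `shellbox_percentage = 100`, a gradual rollout raises the percentage in steps, and setting `use_shellbox` back to `False` reverts everything to SPARQL. Hashing a stable key instead of sampling randomly keeps a given key on the same backend across requests, which makes results from the two backends easier to compare.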

Notes:

Before deploying the new regex code, we should inform SREs / Service Operations about the expected increase in load on the Shellbox service.

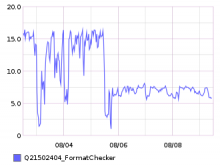

Based on the Wikidata quality dashboard (https://grafana.wikimedia.org/d/000000344/wikidata-quality?viewPanel=10&orgId=1&from=now-7d&to=now), this could mean peaks of up to 250k regex checks per minute, though more regular peaks would be around 50k per minute.
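In per-second terms, which may be easier to compare against service capacity, a rough conversion (assuming the checks are spread evenly across each minute, so real bursts within a minute could be sharper) is:

$$
\frac{250\,000\ \text{checks}}{60\ \text{s}} \approx 4\,167\ \text{checks/s},
\qquad
\frac{50\,000\ \text{checks}}{60\ \text{s}} \approx 833\ \text{checks/s}
$$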