HTTP/2 performance revisited

By Timo Tijhof

Hello, HTTP/2!

In 2016, the Wikimedia Foundation deployed HTTP/2 (or “H2”) support to our CDN. At the time, we used Nginx for TLS termination and two layers of Varnish for caching. We anticipated a possible speed-up as part of the transition, and also identified opportunities to leverage H2 in our architecture.

The HTTP/2 protocol was standardized through the IETF, with Google Chrome shipping support for the experimental SPDY protocol ahead of the standard. Brandon Black (SRE Traffic) led the deployment and had to make a choice between SPDY and H2. We launched with SPDY in 2015, as H2 support was still lacking in many browsers, and Nginx did not support having both. By May 2016, browser support had picked up and we switched to H2.

Goodbye domain sharding?

You can benefit more from HTTP/2 through domain consolidation. The following improvements were achieved by effectively undoing domain sharding:

- Faster delivery of static CSS/JS assets. We changed ResourceLoader to no longer use a dedicated cookieless domain (“bits.wikimedia.org”), and folded our asset entrypoint back into the MediaWiki platform for faster requests local to a given wiki domain name (T107430).

- Faster mobile page loads, specifically for mobile-device “m-dot” redirects. We consolidated the canonical and mobile domains behind the scenes, through DNS. This allows the browser to reuse and carry the same HTTP/2 connection over a cross-domain redirect (T124482).

- Faster Geo service and faster localized fundraising banner rendering. The Geo service was moved from geoiplookup.wikimedia.org to /geoiplookup on each wiki. The service was later removed entirely, in favor of an even faster zero-roundtrip solution (0-RTT): an edge-injected cookie within the Wikimedia CDN (T100902, patch). This transfers the information directly alongside the pageview, without the delay of a JavaScript payload requesting it after the fact.

Could HTTP/2 be slower than HTTP/1?

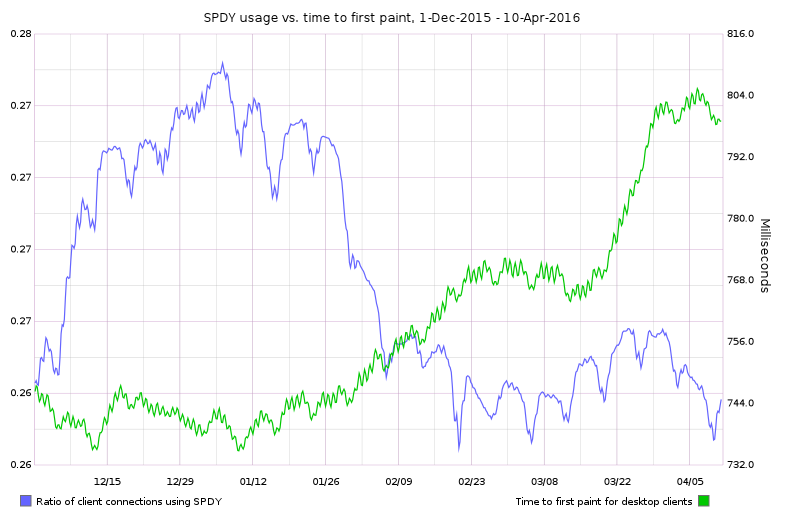

During the SPDY experiment, Peter Hedenskog noticed early on that SPDY and HTTP/2 have a very real risk of being slower than HTTP/1. We observed this through our synthetic testing infrastructure.

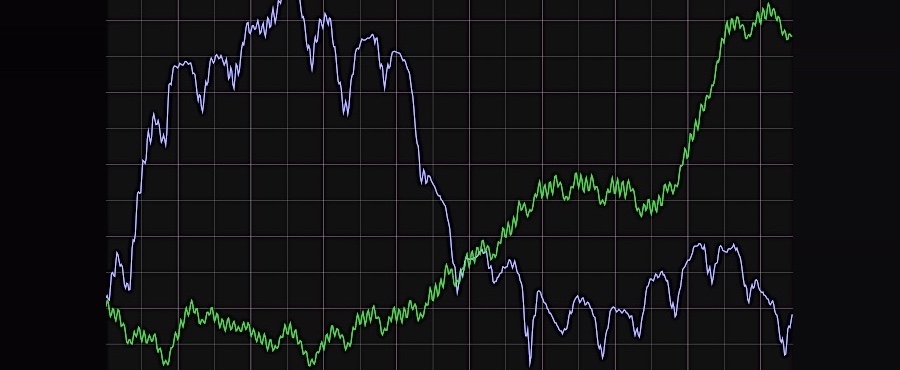

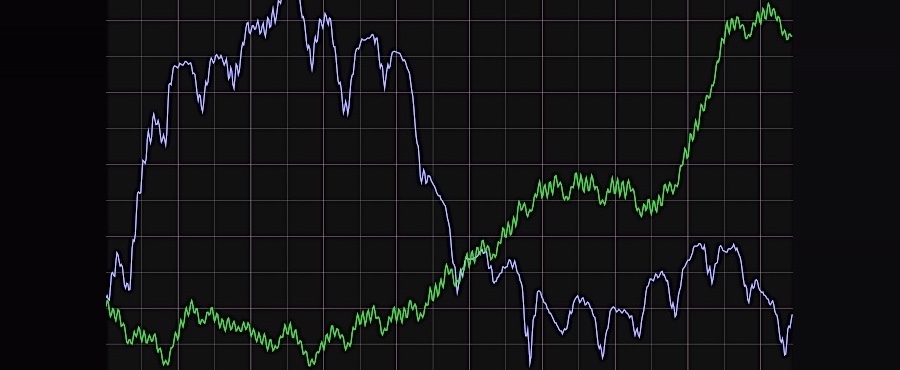

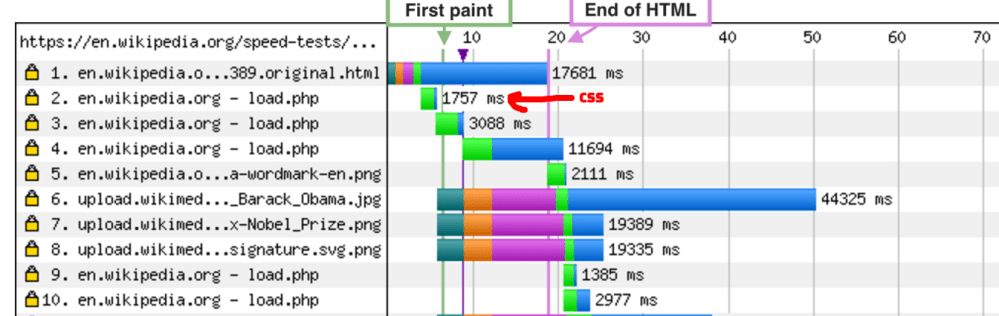

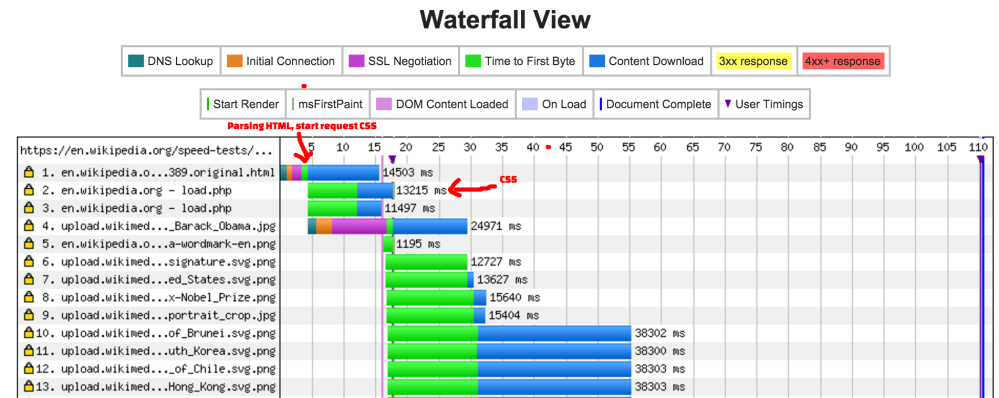

In HTTP/1, all resources are considered equal. When your browser navigates to an article, it creates a dedicated connection and starts downloading HTML from the server. The browser streams, parses, and renders in real-time as each chunk arrives. The browser creates additional connections to fetch stylesheets and images when it encounters references to them. For a typical article, MediaWiki’s stylesheets are notably smaller than the body content. This means, despite naturally being discovered from within (and thus after the start of) the HTML download, the CSS download generally finishes first, while chunks from the HTML continue to trickle in. This is good, because it means we can achieve the First Paint and Visually Complete milestones (above-the-fold) on page views before the HTML has fully downloaded in the background.

In HTTP/2, the browser assigns a bandwidth priority to each resource, and resources share a single connection. This is different from HTTP/1, where each resource has its own connection, and lower-level networks and routers divide bandwidth equally between what look to them like unrelated connections. While the HTML and CSS downloads overlapped, each HTTP/1 connection enjoyed about half the available bandwidth. This was enough for the CSS to slip through without any apparent delay. With HTTP/2, we observed that Chrome was not getting any CSS response until after the HTML was mostly done.

This HTTP/2 feature can solve a similar issue in reverse. If a webpage suffers from large amounts of JavaScript code and below-the-fold images being downloaded during the page load, under HTTP/1 those low-priority resources would compete for bandwidth and starve the critical HTML and CSS downloads. The HTTP/2 priority system allows the browser and server to agree on which resources matter most, and to give more bandwidth to those first. In our case, however, a bug in Chrome caused CSS to effectively have a lower priority relative to HTML (chromium #586938).
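
The effect of the three scheduling behaviours described above can be illustrated with a toy model. This is a minimal sketch, not a measurement and not how Chrome or Nginx actually schedule streams: the payload sizes, the link speed, and the moment the CSS is discovered are made-up round numbers, and the policy names (`equal_share`, `html_first`, `css_first`) are labels invented for this example.

```python
def finish_times(policy, html_bytes=100_000, css_bytes=20_000,
                 css_discovered_ms=100, link_bytes_per_ms=100):
    """Return the millisecond at which each download completes.

    All default numbers are illustrative assumptions. Policies:
      equal_share - two HTTP/1-style connections splitting the link evenly
      html_first  - one shared connection where HTML always wins (the
                    effect of the priority bug described in the text)
      css_first   - one shared connection where CSS wins once requested
    """
    if policy not in ("equal_share", "html_first", "css_first"):
        raise ValueError(f"unknown policy: {policy}")

    remaining = {"html": html_bytes, "css": css_bytes}
    available_from = {"html": 0, "css": css_discovered_ms}
    finished = {}
    t = 0
    while len(finished) < len(remaining):
        # Downloads that have been requested and are not yet complete.
        active = [name for name in remaining
                  if name not in finished and t >= available_from[name]]
        if not active:
            t += 1
            continue
        if policy == "equal_share":
            share = {name: link_bytes_per_ms // len(active) for name in active}
        else:
            order = ["html", "css"] if policy == "html_first" else ["css", "html"]
            winner = next(name for name in order if name in active)
            share = {winner: link_bytes_per_ms}
        # Advance the simulation by one millisecond.
        for name, amount in share.items():
            remaining[name] -= amount
            if remaining[name] <= 0:
                finished[name] = t + 1
        t += 1
    return finished


for policy in ("equal_share", "html_first", "css_first"):
    print(policy, finish_times(policy))
```

With these assumed numbers the HTML finishes at the same moment under every policy, because the link is saturated either way. What changes is when the CSS arrives: partway through the HTML download when the link is shared or the CSS is prioritized, but only after the HTML is done when HTML always wins.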

We confirmed the hypothesis by disabling SPDY support on the Wikimedia CDN for a week (T125979). After Chrome resolved the bug, we transitioned from SPDY to HTTP/2 (T166129, T193221). This transition saw improvements both to how web browsers give signals to the server, and the way Nginx handled those signals.

As it stands today, page load time is overall faster on HTTP/2, and the CSS once again often finishes before the HTML. Thus, we achieve the same great early First Paint and Visually Complete milestones that we were used to from HTTP/1. However, we still see edge cases where HTTP/2 is not able to re-negotiate priorities quickly enough, causing CSS to needlessly be held back by HTML chunks that have already filled up the network pipes for that connection (chromium #849106, still unresolved as of this writing).

Lessons learned

These difficulties in controlling bandwidth prioritization taught us that domain consolidation isn’t a cure-all. We decided to keep operating our thumbnail service at upload.wikimedia.org through a dedicated IP and thus a dedicated connection, for now (T116132).

Browsers may reuse connections for multiple domains if an existing HTTPS connection carries a TLS certificate that lists the other domain among its Subject Alternative Names (SAN), even when this connection is for a domain that corresponds to a different IP address in DNS. Under certain conditions, this can lead to a surprising HTTP 404 error (T207340, mozilla #1363451, mozilla #1222136). Emanuele Rocca from the SRE Traffic team mitigated this by implementing HTTP 421 (Misdirected Request) response codes in compliance with the spec. This way, visitors affected by non-compliant browsers and middleware will automatically recover and reconnect accordingly.
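
The server-side decision behind a 421 response can be sketched as follows. This is an illustration under assumptions, not the actual Wikimedia CDN configuration: the hostnames, the `TEXT_CLUSTER_HOSTS` set, and the `status_for` helper are hypothetical, and the port handling is simplified (it does not cover IPv6 literals).

```python
from http import HTTPStatus
from typing import AbstractSet

# Hypothetical hostnames that one server (or cluster) is willing to answer for.
TEXT_CLUSTER_HOSTS = frozenset({"wiki.example", "m.wiki.example"})


def status_for(authority: str, served_hosts: AbstractSet[str]) -> HTTPStatus:
    """Pick a status for a request arriving on an already-open connection.

    `authority` is the Host header or HTTP/2 :authority value. If the
    connection was reused for a hostname this server does not serve,
    answer 421 so the client can retry on a fresh connection instead
    of receiving a misleading 404.
    """
    host = authority.partition(":")[0].lower()  # drop an optional :port
    if host in served_hosts:
        return HTTPStatus.OK
    return HTTPStatus.MISDIRECTED_REQUEST


print(int(status_for("wiki.example", TEXT_CLUSTER_HOSTS)))
print(int(status_for("upload.example:443", TEXT_CLUSTER_HOSTS)))
```

A client that receives 421 is allowed to retry the request over a different connection, which is what lets affected visitors recover without seeing an error.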

Further reading

- When Network is Faster than Cache, Simon Hearne, 2020.