Abstract

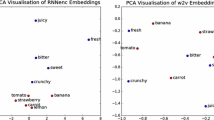

Consider a continuous word embedding model. The cosine between word vectors is commonly used as a measure of word similarity. These cosines do not change under orthogonal transformations of the embedding space. We demonstrate that, using some canonical orthogonal transformations obtained from SVD, it is possible both to make some components more meaningful and to make the components more stable under retraining. We study the interpretability of components for publicly available models for the Russian language (RusVectōrēs, fastText, RDT).
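The invariance claim can be checked numerically. The following is a minimal sketch, not the author's code: it assumes a random toy matrix `W` in place of a real embedding model, and it uses the right singular vectors of `W` as one natural choice of SVD-derived rotation. The names `W`, `W_rot`, and `cosine_matrix` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for an embedding matrix: 1000 "words", 50 dimensions.
# (Assumption: a real model would be loaded from disk instead.)
W = rng.normal(size=(1000, 50))


def cosine_matrix(X):
    """Pairwise cosine similarities between the rows of X."""
    normed = X / np.linalg.norm(X, axis=1, keepdims=True)
    return normed @ normed.T


# Thin SVD: W = U @ diag(s) @ Vt, where Vt is a 50 x 50 orthogonal matrix.
U, s, Vt = np.linalg.svd(W, full_matrices=False)

# Rotate the embedding space so that the coordinate axes align with
# the right singular vectors. Algebraically, W @ Vt.T equals U * s.
W_rot = W @ Vt.T

# Compare cosines on a subset of words before and after the rotation.
cos_before = cosine_matrix(W[:100])
cos_after = cosine_matrix(W_rot[:100])
max_diff = np.abs(cos_before - cos_after).max()
print("largest change in any cosine:", max_diff)
```

The cosines agree up to floating-point error because an orthogonal matrix preserves both dot products and norms. Only the coordinates of each word vector change, which is why the individual components can be made more or less interpretable without affecting similarity scores.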

Notes

- 1. With numpy.linalg.svd, the computation took up to several minutes for a 100K-word vocabulary.

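As an illustration of the computation mentioned in note 1, the sketch below calls numpy.linalg.svd in its thin form on a small random matrix. It then shows a commonly used alternative that is not taken from the paper: when the vocabulary size is much larger than the embedding dimension d, the same rotation can be recovered from an eigendecomposition of the d x d matrix W^T W. The matrix `W` and its sizes are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy matrix: 2000 "words", 30 dimensions (a real vocabulary is far larger).
W = rng.normal(size=(2000, 30))

# Thin SVD: full_matrices=False avoids building a 2000 x 2000 matrix U.
U, s, Vt = np.linalg.svd(W, full_matrices=False)

# Alternative (assumption, not the paper's method):
# W^T W = V diag(s^2) V^T, so the eigenvectors of the small d x d matrix
# give the same rotation V, and the eigenvalues give s^2.
gram = W.T @ W
eigvals, eigvecs = np.linalg.eigh(gram)  # eigenvalues in ascending order

# Reorder to match the descending order of the singular values.
order = np.argsort(eigvals)[::-1]
s_gram = np.sqrt(eigvals[order])
V_gram = eigvecs[:, order]

print("singular values agree:", np.allclose(s, s_gram))
```

The columns of `V_gram` match the rows of `Vt` only up to sign, since singular vectors are defined up to a factor of -1. Forming W^T W also squares the condition number, so the direct SVD remains the safer choice when accuracy matters more than speed.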

Acknowledgements

The author is grateful to Mikhail Dektyarev, Mikhail Nokel, Anna Potapenko and Daniil Tararukhin for valuable and fruitful discussions.

Appendices

Appendix

Top/bottom words for the first few principal components for different Russian models

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Zobnin, A. (2018). Rotations and Interpretability of Word Embeddings: The Case of the Russian Language. In: van der Aalst, W., et al. Analysis of Images, Social Networks and Texts. AIST 2017. Lecture Notes in Computer Science, vol 10716. Springer, Cham. https://doi.org/10.1007/978-3-319-73013-4_11

DOI: https://doi.org/10.1007/978-3-319-73013-4_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-73012-7

Online ISBN: 978-3-319-73013-4

eBook Packages: Computer Science, Computer Science (R0)